Bucket sort, counting sort and radix sort.

GOAL

To understand the difference and pros and cons of “bucket sort”, “counting sort” and “radix sort” and implement them.

Bucket sort

Bucket sort is one of the sorting algorithm that has “bucket” to put numbers in.

import random

import itertools

length = 50 #length of the list

input_list = [random.randint(0, 99) for x in range(length)]

#input: list that has 0~99 int elements

#output: sorted list

def bucket_sort(input_list):

bucket = [[] for x in range(100)]

for i in input_list:

bucket[i].append(i)

return list(itertools.chain.from_iterable(bucket))

print(input_list)

print(bucket_sort(input_list))#output [6, 27, 26, 38, 90, 80, 69, 14, 65, 53] [6, 14, 26, 27, 38, 53, 65, 69, 80, 90]

Complexity: time O(n), space O(r) as n is length of the list, r is range. In the worst case, space complexity is O(r+n)

Pros: Fast algorithm, stable sort

Cons: big memory, the range of input number should be limited.

Counting sort

Counting sort is one of the derivation of bucket sort. Create Bucket and put the number of “occurrences” in it. Iterate the input list counting the number of occurrences.

import random

length = 10

input_list = [random.randint(0, 99) for x in range(length)]

def counting_sort(input_list):

bucket = [0 for x in range(100)]

for i in input_list:

bucket[i] += 1

output = []

for idx, num in enumerate(bucket):

for i in range(num):

output.append(idx)

return output

print(input_list)

print(counting_sort(input_list))#output [84, 33, 72, 10, 31, 4, 4, 46, 89, 52] [4, 4, 10, 31, 33, 46, 52, 72, 84, 89]

Complexity: time O(n), space O(r) as n is length of the list, r is range.

Pros: Fast algorithm, fixed size memory

Cons: unstable, big memory, the range of input number should be limited.

Radix sort

Radix sort is one of the derivation of bucket sort. Convert each elements into n-adic number and put each digit into the bucket.

import random

import itertools

length = 10

input_list = [random.randint(0, 99) for x in range(length)]

def radix_sort(input_list): # n = 10

for i in (range(2)): #digit is 2

bucket= [[] for i in range(10)]

for num in input_list:

index = (num//(10**i)) % 10

bucket[index].append(num)

input_list = list(itertools.chain.from_iterable(bucket)).copy()

return input_list

print(input_list)

print(radix_sort(input_list))#output [26, 4, 7, 48, 71, 31, 95, 20, 94, 55] [4, 7, 20, 26, 31, 48, 55, 71, 94, 95]

Complexity: time O(n), space O(d) as n is length of the list, d is number of digits. In the worst case, space complexity is O(d+n)

Pros: Fast algorithm, small memory, stable sort

Cons: the range of input number should be limited

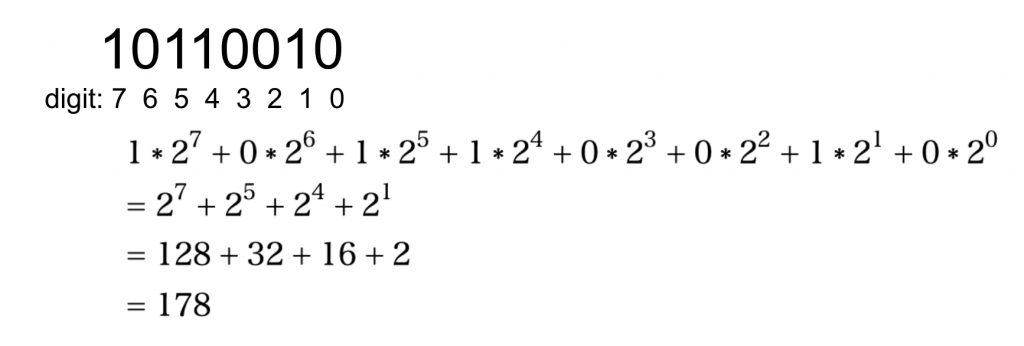

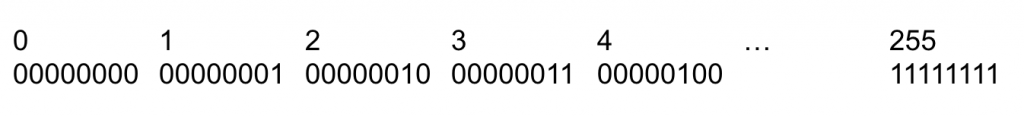

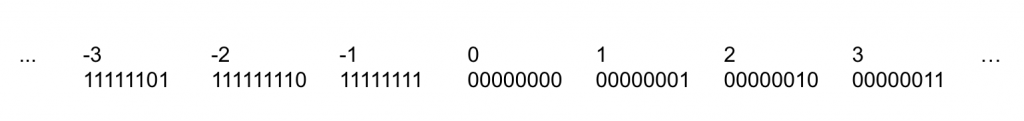

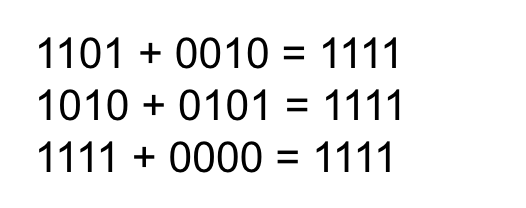

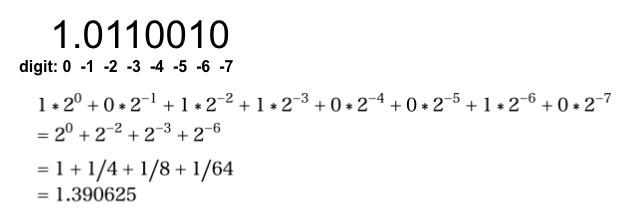

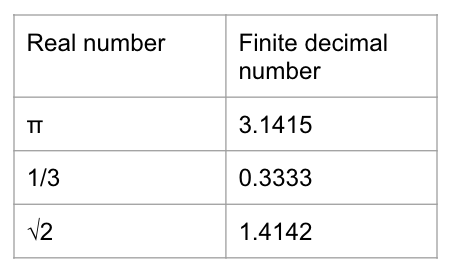

Because it takes some time to calculate division and modulo, using shift operation of binary numbers is efficient.